HPC Is Right Now: The Opioid Crisis & Gordon Bell Prize-Winning Genomic Research at ORNL

Summit’s Capabilities Offer Problem-Solving Power of Which Dreams (and Science Innovations) Are Made

As high-performance computing systems continue solving even more complex problems at increasing scales, they also are enticing scientists to examine and challenge how the systems themselves operate. For Wayne Joubert and Daniel Jacobson, scientists at Oak Ridge National Laboratory, having the world’s fastest supercomputer at their doorstep afforded an opportunity to take on one of society’s most pressing issues, the opioid epidemic, while simultaneously getting a peek at what makes a system like Summit, with 4,608 GPU-accelerated compute nodes and peak performance of approximately 200 petaflops, run smarter and faster than any machine preceding it.

With team members from the University of Missouri-St. Louis, Yale University, Department of Veterans Affairs, University of Tennessee, and Department of Energy Joint Genome Institute, Joubert, an ORNL computational scientist, and Jacobson, ORNL’s chief scientist for computational systems biology, used the world-leading Summit to help untangle how genetic variants, gleaned from vast datasets, can impact whether an individual is susceptible (or not) to disease, including chronic pain and opioid addiction.

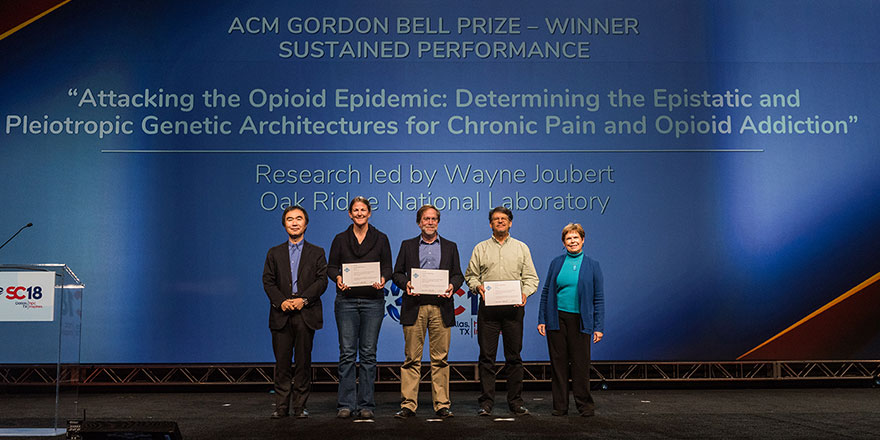

The team’s statistical and computational procedures involved creating a new GPU-optimized application for the custom correlation coefficient, which is used in genomics to calculate interactions between genetic mutations across a population. The result was the fastest-ever scientific calculation, 2.36 exaflops on 99 percent of Summit, with almost five orders of magnitude improved performance compared to the current state of the art. It also earned them a share of the 2018 Gordon Bell Prize, awarded for outstanding achievement in HPC that emphasizes innovation in applications to science, engineering, and large-scale data analytics.
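As a rough illustration of what "calculating interactions between mutations across a population" can look like computationally, the sketch below counts how many individuals carry each pair of variants. It is not the team's custom correlation coefficient, which this article does not define; the function name `cooccurrence_counts` and the toy data are assumptions made for illustration. The point is only that such pairwise counts have the shape of a matrix product, the kind of dense arithmetic GPUs are built for.

```python
def cooccurrence_counts(samples):
    """Count, for every pair of variants, how many individuals carry both.

    `samples` is a list of rows, one per individual; each row holds 0/1
    flags, one per variant (hypothetical encoding, for illustration only).
    The result equals the matrix product X^T X of the 0/1 sample matrix X.
    """
    num_variants = len(samples[0])
    counts = [[0] * num_variants for _ in range(num_variants)]
    for row in samples:
        for i in range(num_variants):
            if row[i]:
                for j in range(num_variants):
                    counts[i][j] += row[j]
    return counts


# Toy population: 4 individuals, 3 variants (made-up data).
toy_samples = [
    [1, 0, 1],
    [1, 1, 0],
    [0, 1, 1],
    [1, 1, 1],
]
toy_counts = cooccurrence_counts(toy_samples)
for line in toy_counts:
    print(line)
```

The diagonal holds how many individuals carry each variant, and the off-diagonal entries hold the pairwise overlaps from which a correlation-style statistic could then be derived.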

Now, Joubert and Jacobson offer their perspectives about why their noteworthy work* has far-reaching implications for other domain sciences, how HPC will keep changing the way research is done, and the upside to winning the Gordon Bell Prize.

Q: What led you to apply Summit’s compute power to something like examining root causes of the opioid crisis, which seems as far away from HPC as you can get?

A: Biological systems are incredibly complex and the possible combinatorial interactions among the components of a human cell are on the order of 10^170. Complex traits and behaviors, such as addiction, will involve the interactions of many components and, as such, are very challenging HPC problems. Thus, to better understand biological systems, the use of HPC is actually central to our efforts.

Q: It appears that many ideas for examining genetic architectures at the population scale have existed for some time. Thus, in addition to its sheer size, what else about the Summit system (and HPC) made this work viable right now?

A: Traditional approaches to genetics, be it Mendelian or traditional genome-wide association studies, known as GWAS, make the assumption that genes/alleles operate independently. Organisms are highly interconnected systems, so this is a false premise for many traits. Epistasis, the idea that alleles (mutations) interact as sets to affect traits, is increasingly being shown to be the rule rather than the exception. However, testing for epistasis takes us to huge combinatorial problems that have been computationally infeasible until now, which has made it a bit of an inconvenient truth in biology. The combination of new algorithm design with the power of Summit is finally allowing us to solve problems like this that we have been dreaming of for a long time. The tensor cores on Summit’s GPUs allowed us to unleash unprecedented amounts of compute power. The large memory footprint of each node allowed us to tackle problem sizes that we previously could not. The NVLink connection between CPU and GPU, along with adaptive routing between nodes, allowed us to parallelize and scale these problems incredibly efficiently, and the burst buffers allowed us to minimize the impact of I/O (input/output).
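To give a rough sense of why testing alleles as sets becomes a combinatorial problem, the short sketch below counts how many distinct pairs and triples exist for a given number of variants. The variant counts are hypothetical, chosen only for illustration, and are not taken from the team's datasets; `interaction_tests` is likewise an illustrative name.

```python
import math


def interaction_tests(num_variants: int, set_size: int) -> int:
    """Number of distinct variant sets of the given size (n choose k)."""
    return math.comb(num_variants, set_size)


# Hypothetical variant counts, for illustration only.
for n in (1_000, 1_000_000):
    pairs = interaction_tests(n, 2)
    triples = interaction_tests(n, 3)
    print(f"{n:>9,} variants: {pairs:.3e} pairs, {triples:.3e} triples")
```

Growing the variant count a thousandfold multiplies the number of pairs by roughly a million and the number of triples by roughly a billion, which is why an independent, one-variant-at-a-time analysis is so much cheaper than an epistatic one.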

Q: How was this genomics research a good way to assess Summit’s effectiveness/efficiency? And, to use car parlance, were you able to engage Summit with a “wide open throttle?”

A: The genomics work gave us a complex and challenging problem that required the entirety of Summit for long runs. As such, we were indeed running Summit with a wide open throttle. The power traces from these runs indicate that this application runs hotter than other applications on Summit and thus is really maximizing use of the available compute power.

Q: Your team’s work builds a distinct bridge between biology and computer science. In what ways do you expect domain and computer scientists’ work to evolve together when seeking to resolve grand challenge problems in applications beyond biology (e.g., environmental science or chemistry)?

A: We also work in areas of environmental science and the effects of chemistry on the environment. In fact, some of our most recent work has been targeted at understanding environments at a planetary scale. As such, I think we can attest to the continued power of the tight integration between domain and computational scientists.

Q: What innovations in both HPC and genomics were realized as a result of this work? Did anything surprise you along the way?

A: Ours was the first project to use tensor cores to break the exascale barrier and is still the fastest calculation ever achieved. The genomics results form a foundation for many of our ongoing studies so are part of many manuscripts now and in the future. In short, this is allowing us to make new scientific discoveries that we simply could not have done before. There are ongoing applications of this work in a broad range of fields, including, but not limited to, precision medicine, agriculture, bioenergy, and ecosystems research. One of the surprises has been unexpected patterns and biological signatures that these approaches are revealing, well beyond the expected results.

Q: What did it mean to your team to earn a share of the 2018 Gordon Bell Prize, and how does it further validate your research?

A: We are most excited about all of the new science that this work enables. I am particularly proud that we were the first group to break the exascale barrier and that we did it for biology, thus demonstrating the importance of supercomputing for complex biological problems. Receiving the Gordon Bell has been a nice validation of this high-end convergence between biology and supercomputing.

Q: Can you share any helpful hints for anyone who may be considering whether her or his research merits a Gordon Bell Prize submission?

A: Pick an incredibly challenging problem, map it to the right collection of compute architectures, start as far ahead of time as you can, plan for a lot of failures along the way, and work very, very hard.

Q: What does SC19’s theme, ‘HPC is Now’, mean to you?

A: The importance of HPC in an increasingly data-driven world is very apparent right now, not just sometime in the future.

*Read More: Joubert W, D Weighill, D Kainer, S Climer, A Justice, K Fagnan, and D Jacobson (2018). Attacking the Opioid Epidemic: Determining the Epistatic and Pleiotropic Genetic Architectures for Chronic Pain and Opioid Addiction. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (SC’18), Article 57. Nov. 11-16, 2018, Dallas, Texas. IEEE Press, Piscataway, NJ.

–––

Charity Plata, SC19 Communications Team Writer (Brookhaven National Laboratory)

Charity Plata provides comprehensive editorial oversight to Brookhaven National Laboratory’s Computational Science Initiative. Her writing and editing career spans diverse industries, including publishing, architecture, civil engineering, and professional sports. Prior to joining Brookhaven Lab in 2018, she worked at Pacific Northwest National Laboratory primarily within the Advanced Computing, Mathematics and Data Division.